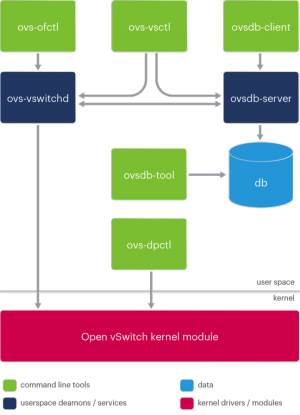

Hyper-V Open vSwitch Porting

The objective of this document is to provide an architectural overview of the porting effort of Open vSwitch (OVS) to Windows, considering in particular how it has been adapted to the Hyper-V networking model and how Linux specific user space and kernel features have been replaced with Windows equivalents.

Goals

- Porting the OVS user space tools

- Kernel features: OpenFlow, GRE, VXLAN

- Interoperability (e.g. Linux and KVM)

- Support for Windows Server and Hyper-V Server 2012, 2012 R2 and above

- Support for Hyper-V external, internal and private switches

- Seamless integration with Hyper-V networking

- Maintaining the original command line tools (CLI surface).

- PowerShell support

- Support for running OpenStack Neutron OVS agent on Windows (leading use case)

- Security

- Stability

- Performance

Non goals:

- TAP devices

- Bridge management

- Cygwin

Additional features will be evaluated for subsequent releases.

Integration with Hyper-V networking

One of the main challenges is maintaining consistency with both the Hyper-V and OVS networking models, with a UX familiar to both Hyper-V and OVS users.

The Hyper-V networking configuration differs from OVS in Linux virtualization environments (e.g. KVM) in key areas, where the most prominent one is the usage of virtual switches and virtual switch ports versus bridges and tap devices.

Hyper-V Server 2012 introduced an extensible switch model that provides enough flexibility for our scenario. Instead of creating a completely parallel networking stack, an NDIS forwarding extension can bridge the two environments.

The alternative option of introducing tap devices on Windows, to mirror the Linux OVS networking architecture, offers no benefit in our scenario and can be avoided, although tap devices are widely employed by some popular networking solutions, e.g.: https://github.com/OpenVPN/tap-windows

From a Hyper-V perspective there's the need to tag a Hyper-V switch port in order to provide correlation with an OVS port, which can be accomplished by using a WMI class used to bind a VM network adapter with a vswitch port (Msvm_EthernetPortAllocationSettingData). An alternative would require the creation of an additional WMI provider to support the mapping.

From a usage perspective, associating a VM adapter with a port requires a very simple PowerShell cmdlet execution. All the remaining port configurations (e.g. GRE, VXLAN, flow rules, etc) are performed in OVS. The actual packet filtering and forwarding is carried out in the kernel extension.

    ovs-vsctl.exe add-port br0 port1
    Get-VMNetworkAdapter VM1 | Set-VMNetworkAdapterOVSPort -OVSPortName port1

The OVS Hyper-V extension can be enabled with the following standard Hyper-V cmdlet for one or more virtual switches:

Enable-VMSwitchExtension openvswitch -VMSwitchName external

OpenFlow rules can be added as well as required:

ovs-ofctl.exe add-flow tcp:127.0.0.1:6633 actions=drop

The following diagram summarizes the basic concepts introduced in this section:

User space porting challenges

Like many other projects born on Linux and designed primarily with Linux / Posix scenarios in mind, OVS includes various areas with tight coupling with the OS features, which require the introduction of alternative code paths or a proper refactoring to provide an appropriate abstraction layer.

The following list includes the main critical areas encountered during the porting.

Build system

OVS uses Autoconf / Automake, which can be used with MinGW on Windows. We added CMake configuration files in parallel to support Visual Studio 2013 builds. Visual Studio is not a strict requirement from a build perspective, but is highly suggested to obtain appropriate development and troubleshooting productivity due to modern IDE features otherwise not available in MinGW.

C99

OVS is written entirely in C, including the usage of C99 features. VS 2013 includes an array of additional C99 features previously not available that helped in easing the porting from a C language perspective. There are unfortunately still a few unsupported features that required workarounds. Most of these issues have been addressed in the Cloudbase port and subsequently in the OVS userspace code repository master branch thanks to community efforts.

- “%zu” formatting missing in printf() and other standard library functions

- snprintf() not available

- #include_next not available

- gettimeofday() not available

- Various minor #defines for constants and/or function synonyms

Features that required mapping to different APIs or alternative solutions:

- Netlink interface not available. This is detailed in a separate paragraph further on.

- Replace Unix sockets with TCP/IP, as this required the minimum set of changes in the codebase. Named pipes should be considered as well to improve performance.

- SIGHUP, SIGINT and other signals are not available

- Map socket features to the Winsock (WSA) equivalents. poll() in particular is not available.

- Replace cryptographic routines with CryptoAPI equivalents

- getopt not available

- Linux daemons (ovs-vswitchd and ovsdb-server) need to be executed as Windows services
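The replacement of Unix sockets with TCP/IP listed above can be sketched as follows. This is only an illustration of the idea, not the code used in the port: the helper names `open_control_listener`, `serve_once` and `send_command` are hypothetical, and the loopback address with an OS-assigned port is an assumption.

```python
import socket


def open_control_listener(host="127.0.0.1", port=0):
    """Listen on a loopback TCP socket instead of a Unix domain socket.

    Port 0 asks the OS for a free port; a real daemon would have to
    publish the chosen port so that its clients can find it.
    """
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.settimeout(5)
    srv.bind((host, port))
    srv.listen(1)
    return srv


def _read_all(conn):
    """Read from a connection until the peer closes its sending side."""
    chunks = []
    while True:
        data = conn.recv(4096)
        if not data:
            return b"".join(chunks)
        chunks.append(data)


def serve_once(srv, handler):
    """Accept one client, pass its request to handler, send back the reply."""
    conn, _ = srv.accept()
    with conn:
        request = _read_all(conn).decode("utf-8")
        conn.sendall(handler(request).encode("utf-8"))


def send_command(address, command):
    """Connect to the listener, send one command and return the reply."""
    with socket.create_connection(address, timeout=5) as conn:
        conn.sendall(command.encode("utf-8"))
        conn.shutdown(socket.SHUT_WR)  # tell the server the request is complete
        return _read_all(conn).decode("utf-8")
```

Binding to 127.0.0.1 keeps the control channel local to the host, which is the closest TCP equivalent to the locality of a Unix socket, although it does not provide file-system based access control.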

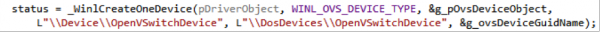

Netlink replacement

Linux provides a socket based abstraction called Netlink to simplify user space / kernel communication. This feature is not available on Windows and has been replaced with the implementation of an ad hoc layer based on IoCreateDevice / CreateFile / ReadFile / WriteFile APIs. The user space part has been encapsulated in an API interface semantically identical to Netlink, to limit the amount of changes required in the OVS codebase.

Kernel:

User space (ovs-vswitchd and ovs-dpctl):

Packets sent from user space are identified based on the sending handles and queued for processing to be subsequently consumed by the appropriate handlers.

The switch MAC-learning feature employs communication over this channel, which means that the related bandwidth implications must be taken into consideration.
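To make the idea of a Netlink-like interface over plain read / write calls more concrete, the following minimal sketch frames messages with a header laid out like the Linux `struct nlmsghdr` (length, type, flags, sequence number, port id) and reads them back from a file-like object. The function names are hypothetical, the in-memory buffer stands in for the device handle, and the 4-byte alignment padding used by real Netlink is left out for brevity.

```python
import io
import struct

# Same field layout as the Linux struct nlmsghdr: u32 len, u16 type,
# u16 flags, u32 seq, u32 pid (16 bytes, host byte order).
NLMSG_HDR = struct.Struct("=IHHII")


def pack_message(msg_type, flags, seq, pid, payload):
    """Prefix a payload with a Netlink-style header; len includes the header."""
    length = NLMSG_HDR.size + len(payload)
    return NLMSG_HDR.pack(length, msg_type, flags, seq, pid) + payload


def read_message(device):
    """Read one framed message from a file-like object (stand-in for ReadFile)."""
    header = device.read(NLMSG_HDR.size)
    if len(header) < NLMSG_HDR.size:
        return None  # no complete message available
    length, msg_type, flags, seq, pid = NLMSG_HDR.unpack(header)
    payload = device.read(length - NLMSG_HDR.size)
    return {"type": msg_type, "flags": flags, "seq": seq,
            "pid": pid, "payload": payload}


# Usage: an in-memory buffer plays the role of the device handle.
device = io.BytesIO(pack_message(16, 1, 7, 1234, b"hello"))
message = read_message(device)
print(message["type"], message["seq"], message["payload"])
```

Keeping the header layout identical is what allows the calling code to stay unaware of whether the transport is a Netlink socket or a device handle.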

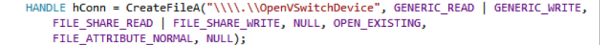

Kernel NDIS Hyper-V extension

While the user space OVS components are responsible for the switch and flow configuration, the actual networking and encapsulation is managed by an NDIS Hyper-V forwarding extension.

The main roles of this extension are:

- Communication with ovs-vswitchd for configuration management and monitoring, as detailed in the Netlink paragraph above.

- Matching Hyper-V ports to OVS ports to identify the proper sources and destinations.

- Applying OpenFlow rules on the ingress / egress datapaths. This includes:

  - Filtering

  - Packet manipulation

  - GRE and VXLAN tunneling

  - Easily extensible to other encapsulation options (e.g. Geneve)

  - VLAN tagging (optionally, as 802.1q is already supported by Hyper-V networking)

The extension contains all the low level packet manipulation which is required for the supported protocols (TCP, UDP, ICMP, etc) on both IPv4 and IPv6, including GRE and VXLAN packet encapsulation / decapsulation for tunneling. In the GRE case, OVS currently uses EtherType 0x6558 (Transparent Ethernet Bridging) only.
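As an illustration of what encapsulation involves at the byte level, the sketch below builds the tunnel-specific headers only: a GRE header carrying a key (RFC 2890) with protocol type 0x6558, and the 8-byte VXLAN header (RFC 7348). The outer Ethernet, IP and UDP headers are omitted, and the function names are hypothetical rather than taken from the driver.

```python
import struct

ETH_P_TEB = 0x6558     # Transparent Ethernet Bridging: payload is an Ethernet frame
GRE_FLAG_KEY = 0x2000  # K bit: a 32-bit key follows the base GRE header
VXLAN_FLAG_VNI = 0x08  # I bit: the VNI field is valid


def gre_header(key):
    """Build an 8-byte GRE header carrying a 32-bit key."""
    return struct.pack("!HHI", GRE_FLAG_KEY, ETH_P_TEB, key)


def vxlan_header(vni):
    """Build the 8-byte VXLAN header for a 24-bit network identifier."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    # Flags occupy the first byte, the VNI the fifth to seventh bytes.
    return struct.pack("!II", VXLAN_FLAG_VNI << 24, vni << 8)


def vxlan_decap(payload):
    """Split a VXLAN payload into (vni, inner Ethernet frame)."""
    flags_word, vni_word = struct.unpack("!II", payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI:
        raise ValueError("VNI flag not set")
    return vni_word >> 8, payload[8:]


print(gre_header(5).hex())     # 2000655800000005
print(vxlan_header(42).hex())  # 0800000000002a00
```

The key and the VNI are the values that `in_key=flow` and `out_key=flow` leave for the flow rules to set and match on a per-packet basis.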

Note: host tunnel endpoints are currently managed by setting AllowManagementOS to true on the Hyper-V virtual switch, thus delegating the adapter management to the OS. This requirement will be removed by managing host endpoints directly in the driver.

Hyper-V architecture diagram:

Packet flow

All packets are received on the Ingress Hyper-V datapath by the NDIS call handler SendNetBufferListsHandler.

If the extension is enabled, the driver starts processing the packets by extracting the source and destination details, including: tunnel id, tunnel flags, IPv4 source and destination address, IPv4 protocol, IPv4 fragment, IPv4 time to live, packet priority and mark, OVS input port, Ethernet source and destination, VLAN tag and type, IPv6 source and destination address, IPv6 flow label, IPv6 source and destination port, IPv6 neighbour discovery.
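The extraction step can be pictured with a small sketch that parses a raw Ethernet frame into a key holding a subset of the fields listed above (input port, Ethernet addresses, VLAN tag, IPv4 addresses, protocol and time to live). The `FlowKey` layout and the function names are assumptions made for this example and do not mirror the driver's data structures.

```python
import socket
import struct
from collections import namedtuple

FlowKey = namedtuple(
    "FlowKey",
    "in_port eth_src eth_dst vlan_tci eth_type ip_src ip_dst ip_proto ip_ttl",
)

ETH_P_8021Q = 0x8100
ETH_P_IP = 0x0800


def format_mac(raw):
    """Render six raw bytes as a colon-separated MAC address."""
    return ":".join("%02x" % b for b in raw)


def extract_flow_key(in_port, frame):
    """Extract a subset of the flow key fields from a raw Ethernet frame."""
    eth_dst, eth_src = frame[0:6], frame[6:12]
    (eth_type,) = struct.unpack("!H", frame[12:14])
    offset, vlan_tci = 14, None
    if eth_type == ETH_P_8021Q:
        # 802.1Q tag: 16-bit TCI followed by the encapsulated EtherType.
        vlan_tci, eth_type = struct.unpack("!HH", frame[14:18])
        offset = 18
    ip_src = ip_dst = ip_proto = ip_ttl = None
    if eth_type == ETH_P_IP:
        ip = frame[offset:offset + 20]
        ip_ttl, ip_proto = ip[8], ip[9]
        ip_src = socket.inet_ntoa(ip[12:16])
        ip_dst = socket.inet_ntoa(ip[16:20])
    return FlowKey(in_port, format_mac(eth_src), format_mac(eth_dst),
                   vlan_tci, eth_type, ip_src, ip_dst, ip_proto, ip_ttl)


# Usage: a minimal untagged IPv4/TCP header with TTL 64.
ip_header = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20, 0, 0, 64, 6, 0,
                        socket.inet_aton("10.13.8.4"),
                        socket.inet_aton("10.13.8.3"))
frame = (bytes.fromhex("ffffffffffff") + bytes.fromhex("00155d000001")
         + struct.pack("!H", ETH_P_IP) + ip_header)
print(extract_flow_key(3, frame))
```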

The packet is then matched against the OpenFlow table cached in the driver and the relevant rules are applied, including decapsulation for GRE and VXLAN packets. If MAC-learning is enabled and the packet cannot be matched against existing rules, it is sent to userspace for further processing. The userspace ovs-vswitchd service processes the packet and determines the corresponding action for it, including the optional creation of a flow rule in the flow table for this particular packet.
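The interplay between the cached flow table and the userspace miss path can be modelled with a toy example. It is a deliberate simplification: the table is an exact-match dictionary, whereas real flow matching also involves wildcarded fields, and the class and function names are hypothetical.

```python
from collections import deque


class Datapath:
    """Toy model of a cached flow table with a miss path to user space."""

    def __init__(self):
        self.flows = {}         # flow key -> list of actions
        self.stats = {}         # flow key -> number of matched packets
        self.upcalls = deque()  # missed packets waiting for user space
        self.hits = 0
        self.misses = 0

    def receive(self, key, packet):
        """Return the cached actions, or queue the packet and return None."""
        actions = self.flows.get(key)
        if actions is None:
            self.misses += 1
            self.upcalls.append((key, packet))
            return None
        self.hits += 1
        self.stats[key] += 1
        return actions

    def install_flow(self, key, actions):
        """Cache the actions chosen by user space for this key."""
        self.flows[key] = list(actions)
        self.stats.setdefault(key, 0)


def handle_upcalls(datapath, decide):
    """User space side: choose actions for each missed packet and cache them."""
    handled = []
    while datapath.upcalls:
        key, packet = datapath.upcalls.popleft()
        actions = decide(key, packet)
        datapath.install_flow(key, actions)
        handled.append((key, actions))
    return handled
```

Only the first packet of a flow takes the slow path in this model; subsequent packets with the same key are served from the cache, which is why the bandwidth of the kernel / userspace channel matters mostly for new flows.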

Packets are finally sent to their destination Hyper-V ports using the NdisFSendNetBufferLists handler.

Flow table rules and datapath statistics are updated accordingly.

Usage example

Initial OVS configuration:

    ovsdb-tool.exe create conf.db vswitch.ovsschema
    net start ovsdb-server
    net start ovs-vswitchd

Hyper-V configuration:

    New-VMSwitch external -AllowManagementOS $true -NetAdapterName Ethernet1
    Enable-VMSwitchExtension external openvswitch
    # Disable "Large Send Offload" (this requirement will be removed in the future).
    Set-NetAdapterAdvancedProperty "vEthernet (external)" -RegistryKeyword "*LsoV2IPv4" -RegistryValue 0
    Set-NetAdapterAdvancedProperty "vEthernet (external)" -RegistryKeyword "*LsoV2IPv6" -RegistryValue 0

OVS switch configuration:

    ovs-vsctl.exe add-br br0
    ovs-vsctl.exe add-port br0 external

Adding a GRE tunnel:

    ovs-vsctl.exe add-port br0 gre-1
    ovs-vsctl.exe set Interface gre-1 type=gre
    ovs-vsctl.exe set Interface gre-1 options:local_ip=10.13.8.4
    ovs-vsctl.exe set Interface gre-1 options:remote_ip=10.13.8.3
    ovs-vsctl.exe set Interface gre-1 options:in_key=flow
    ovs-vsctl.exe set Interface gre-1 options:out_key=flow

Adding a VXLAN tunnel:

    ovs-vsctl.exe add-port br0 vxlan-1
    ovs-vsctl.exe set Interface vxlan-1 type=vxlan
    ovs-vsctl.exe set Interface vxlan-1 options:local_ip=10.13.8.4
    ovs-vsctl.exe set Interface vxlan-1 options:remote_ip=10.13.8.2
    ovs-vsctl.exe set Interface vxlan-1 options:in_key=flow
    ovs-vsctl.exe set Interface vxlan-1 options:out_key=flow

To attach a VM to a given OVS port:

    Connect-VMNetworkAdapter VM1 -SwitchName external
    Get-VMNetworkAdapter VM1 | Set-VMNetworkAdapterOVSPort -OVSPortName "vxlan-1"

A full example is available at: http://www.cloudbase.it/open-vswitch-on-hyper-v/

Development and build system

The kernel driver can be compiled with Microsoft Visual Studio 2013, including the freely available "Express for Windows Desktop" edition.

Besides using the Visual Studio IDE, the driver can be built in fully automated mode on the command line as well:

msbuild openvswitch.sln /m /p:Configuration="Win8.1 Release"

Automated builds are currently performed after each commit, with an additional MSI installer available to ease deployment: https://www.cloudbase.it/downloads/openvswitch-hyperv-installer-beta.msi

The driver must be signed with a valid Authenticode certificate in order to be deployed on non-testing environments.

The Microsoft WHQL certification process can be started as soon as the upstream code merging is complete and a stable release milestone is reached.

Continuous integration tests

Continuous integration (CI) testing becomes mandatory from a practical standpoint, especially if this effort is merged into the upstream OVS repository.

CI testing benefits:

- Automated testing for each candidate patchset

- Avoiding regressions

- Kernel failures lead to kernel panics (blue screens) or OS freezing and need to be properly tested

- Peace of mind for developers not familiar with the platform (OVS devs are mostly Linux oriented)

- Multi platform testing (e.g. Windows Server 2012 / 2012 R2)

- Multiple hardware combination tests

The Hyper-V OVS CI testing framework can be hosted by Microsoft, Cloudbase Solutions or other interested parties. Microsoft already hosts the OpenStack CI testing infrastructure.

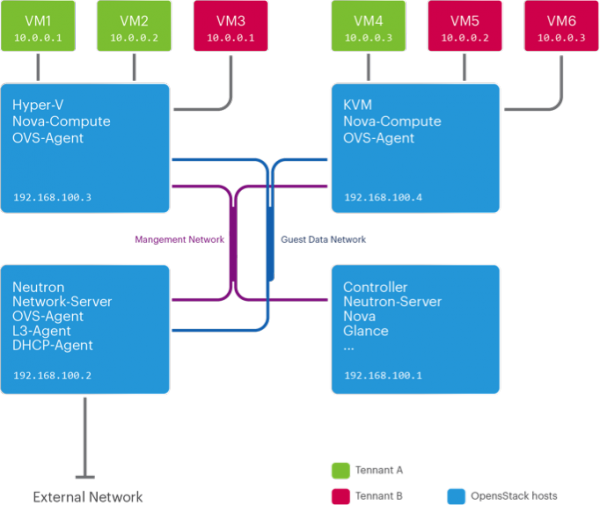

OpenStack use case

The main use case for this effort is to improve interoperability between Hyper-V and other clouds / virtualization solutions, OpenStack in particular, especially in the context of large multi-tenant cloud infrastructures.

The existing official Neutron Hyper-V agent, developed and maintained by Cloudbase Solutions as part of our ongoing OpenStack Hyper-V integration effort, offers full interoperability with flat or 802.1Q (VLAN) based networks in heterogeneous clouds (e.g. KVM, VMware vSphere, XenServer etc), but it lacks a common ground when it comes to multi-tenant isolation based on tunneling.

VLANs are also limited in number (4094 usable IDs) and considerably affect the MAC learning features of the switches in large clouds, where large numbers of virtual instances are deployed.

Hyper-V offers a tunnelling solution based on NVGRE, which currently lacks interoperability with other networking stacks, while OVS has become the de facto standard in most cloud deployments, especially those based on OpenStack.
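The scale difference between VLAN based and tunnel based isolation follows directly from the width of the identifier fields. The short calculation below compares the 12-bit 802.1Q VLAN ID, where the values 0 and 4095 are reserved, with the 24-bit VXLAN network identifier and the 32-bit GRE key used by the tunnel types covered in this document.

```python
VLAN_ID_BITS = 12    # 802.1Q VLAN identifier
VXLAN_VNI_BITS = 24  # VXLAN network identifier (RFC 7348)
GRE_KEY_BITS = 32    # GRE key field (RFC 2890)

# VLAN IDs 0 and 4095 are reserved, so they cannot identify a tenant network.
usable_vlans = 2 ** VLAN_ID_BITS - 2
vxlan_segments = 2 ** VXLAN_VNI_BITS
gre_keys = 2 ** GRE_KEY_BITS

print(usable_vlans)    # 4094
print(vxlan_segments)  # 16777216
print(gre_keys)        # 4294967296
```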

The Neutron OVS agent uses the OVS command line tools to apply the required L2 configurations for each instance on a given hypervisor host. By porting the user space tools we guarantee full compatibility across Linux and Windows for applying the switch configuration on a given host.
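Since the same command line surface is available on both platforms, an agent only needs to vary the executable name. The sketch below is not the Neutron agent's code: it is a hypothetical helper, `tunnel_port_commands`, that builds the same `ovs-vsctl` invocations shown in the usage example of this document as argument lists, without executing them.

```python
def tunnel_port_commands(bridge, port, tunnel_type, local_ip, remote_ip,
                         vsctl="ovs-vsctl.exe"):
    """Return the ovs-vsctl invocations (argv lists) for a flow-keyed tunnel port."""
    if tunnel_type not in ("gre", "vxlan"):
        raise ValueError("unsupported tunnel type: %s" % tunnel_type)
    settings = [
        "type=%s" % tunnel_type,
        "options:local_ip=%s" % local_ip,
        "options:remote_ip=%s" % remote_ip,
        "options:in_key=flow",
        "options:out_key=flow",
    ]
    commands = [[vsctl, "add-port", bridge, port]]
    commands += [[vsctl, "set", "Interface", port, s] for s in settings]
    return commands


# Usage: print the commands for the GRE tunnel from the usage example.
for argv in tunnel_port_commands("br0", "gre-1", "gre", "10.13.8.4", "10.13.8.3"):
    print(" ".join(argv))
```

Each argument list could be passed to `subprocess.run(argv, check=True)`; on a Linux host the only change would be `vsctl="ovs-vsctl"`.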

At the same time, the tunnelling features of the OVS Hyper-V extension take care of generating networking traffic fully compatible with the Linux counterparts. Multi-tenancy is also guaranteed by the network isolation obtained by using separate tunnels and appropriate OpenFlow rules.

A typical use case includes mixed Hyper-V and KVM hosts in an OpenStack deployment, as summarized by the following diagram:

Future

- Additional performance improvements

- Manage tunnel endpoint adapters in the driver to remove the requirement for AllowManagementOS

- Geneve encapsulation

- Hardware offload for VXLAN encapsulation

- Matching the ongoing development of new upstream userspace features

Code repositories

- Userspace tools: https://github.com/cloudbase/openvswitch-hyperv

Resources

- Installing and configuring the Hyper-V OVS extension: http://www.cloudbase.it/open-vswitch-on-hyper-v/

- Hyper-V virtual switch architecture (TechNet): http://technet.microsoft.com/en-us/library/hh831823.aspx

- Hyper-V virtual switch architecture (MSFT Blog): http://blogs.msdn.com/b/sdncorner/archive/2014/02/21/hyper-v-virtual-switch-architecture.aspx

- Hyper-V virtual switch extension APIs: http://msdn.microsoft.com/en-us/library/windows/hardware/jj673961(v=vs.85).aspx

- Visual Studio 2013 Express (free): http://www.visualstudio.com/downloads/download-visual-studio-vs#d-express-windows-desktop